If you are interested in our study, please contact xingqunqi@gmail.com.

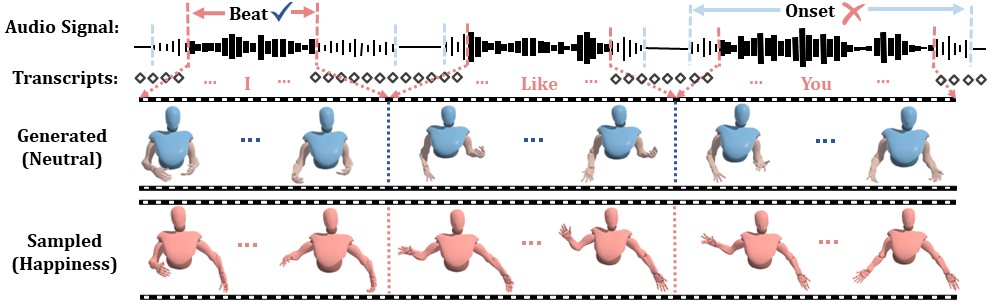

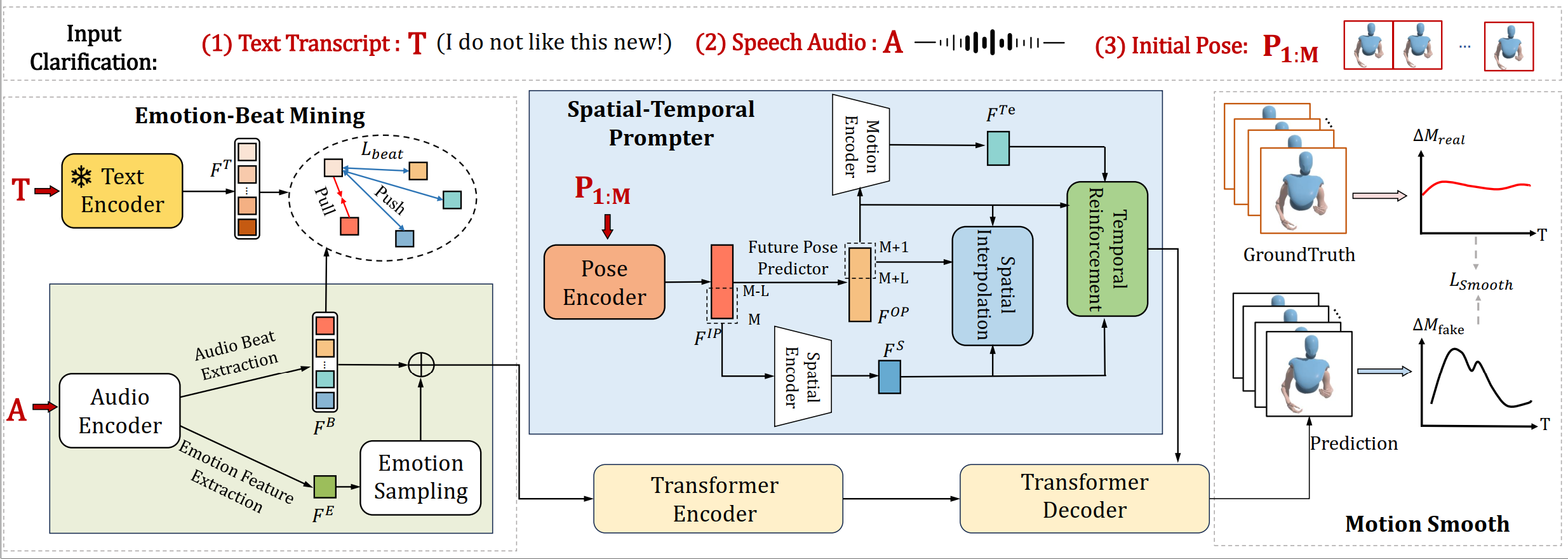

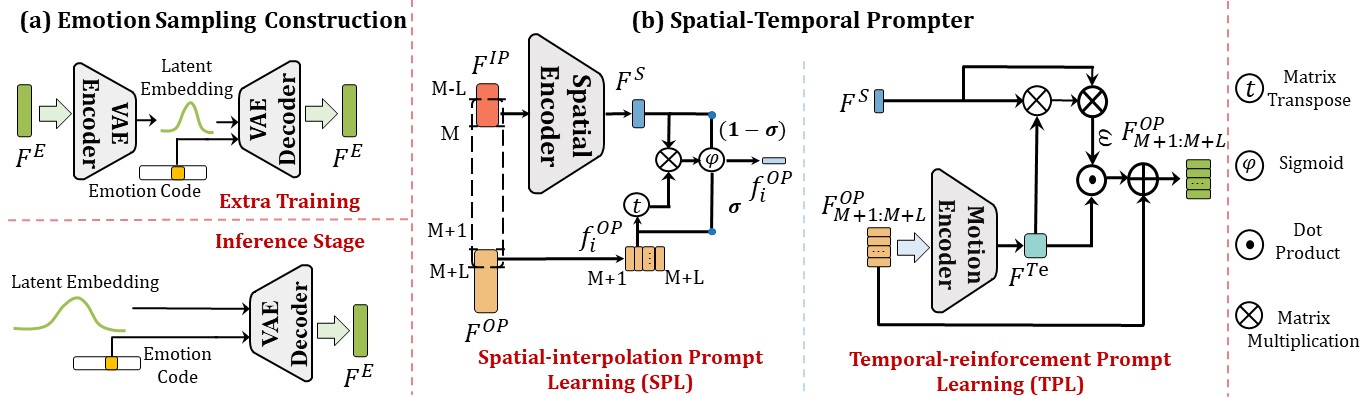

Generating vivid and diverse 3D co-speech gestures is crucial for animating virtual avatars in various applications. While most existing methods generate gestures directly from audio, they usually overlook that emotion is one of the key factors in authentic co-speech gesture generation. In this work, we propose EmotionGesture, a novel framework for synthesizing vivid and diverse emotional co-speech 3D gestures from audio. Since emotion is often entangled with the rhythmic beat in speech audio, we first develop an Emotion-Beat Mining module (EBM) to extract emotion and audio beat features and to model their correlation via a transcript-based visual-rhythm alignment. Then, we propose an initial-pose-based Spatial-Temporal Prompter (STP) to generate future gestures from the given initial poses. STP effectively models the spatial-temporal correlations between the initial poses and the future gestures, thus producing a spatially and temporally coherent pose prompt. Given the pose prompts, emotion features, and audio beat features, we generate 3D co-speech gestures through a transformer architecture. However, the poses in existing datasets often contain jitter, which would lead to unstable generated gestures. To address this issue, we propose an effective objective function, dubbed Motion-Smooth Loss. Specifically, we model the motion offset to compensate for the jittering ground truth, forcing the generated gestures to be smooth. Finally, we present an emotion-conditioned VAE to sample emotion features, enabling us to generate diverse emotional results. Extensive experiments demonstrate that our framework outperforms state-of-the-art methods, producing vivid and diverse emotional co-speech 3D gestures.
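The abstract does not give the Motion-Smooth Loss formula or the VAE details, so the following is only a minimal Python sketch, under our own assumptions, of two standard ingredients it alludes to: a generic smoothness penalty (mean squared second-order temporal difference of a pose sequence) and the usual VAE reparameterization step for sampling an emotion feature. The names `smoothness_penalty` and `sample_emotion_feature` are hypothetical, the encoder that would produce `mu` and `log_var` from the emotion condition is not shown, and the paper's motion-offset modeling is not reproduced here.

```python
import math
import random
from typing import List, Sequence

# One frame = flattened joint coordinates (assumed layout, for illustration only).
Pose = Sequence[float]


def smoothness_penalty(poses: Sequence[Pose]) -> float:
    """Mean squared acceleration (second-order temporal difference) of a pose sequence.

    A generic smoothness regularizer, NOT the paper's Motion-Smooth Loss.
    """
    if len(poses) < 3:
        return 0.0  # acceleration needs at least three frames
    total, count = 0.0, 0
    for t in range(1, len(poses) - 1):
        for prev, cur, nxt in zip(poses[t - 1], poses[t], poses[t + 1]):
            accel = nxt - 2.0 * cur + prev  # discrete second derivative per coordinate
            total += accel * accel
            count += 1
    return total / count


def sample_emotion_feature(
    mu: Sequence[float], log_var: Sequence[float], rng: random.Random
) -> List[float]:
    """Standard VAE reparameterization: z = mu + sigma * eps, eps ~ N(0, 1).

    mu and log_var are assumed to come from a (hypothetical) emotion-conditioned encoder.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]
```

For example, `smoothness_penalty` returns `0.0` for a constant-velocity sequence such as `[[0.0, 0.0], [1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]` and a positive value once frames jitter. Penalizing acceleration rather than velocity leaves steady motion unpenalized, which is one common way to discourage jitter without freezing the gesture.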

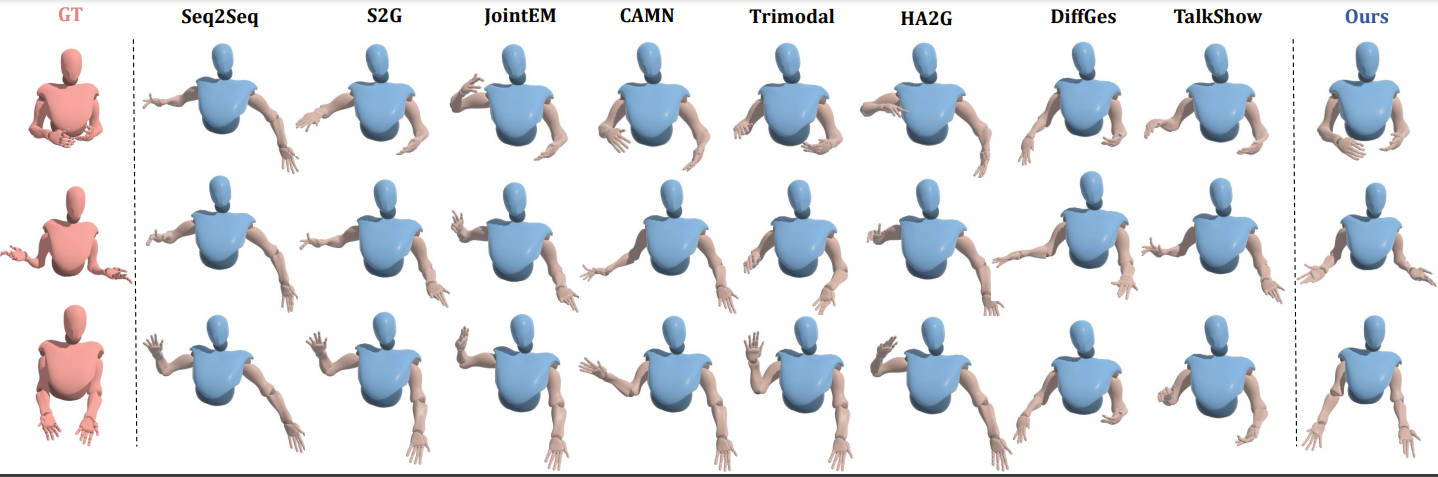

Visualization of our predicted 3D hand gestures compared with various state-of-the-art methods. From top to bottom, we show three keyframes (an early, a middle, and a late one) of a pose sequence. Best viewed on screen.

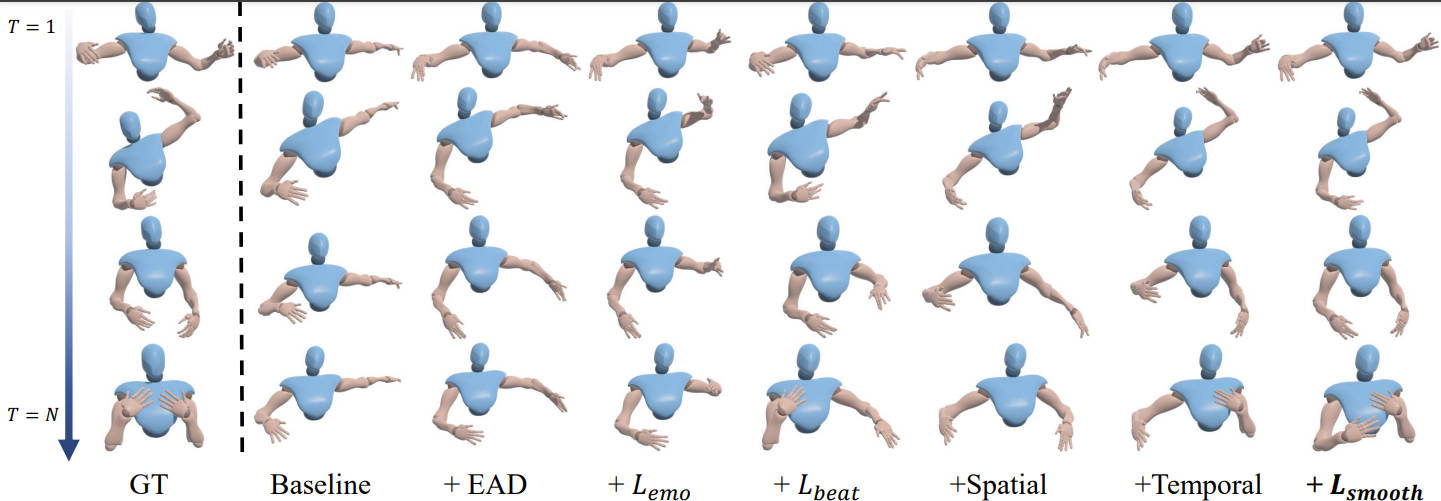

Visual comparisons for the ablation study. We show keyframes of the generated gestures: from top to bottom, four keyframes (an early, two middle, and a late one) of a pose sequence. Best viewed on screen.

Visual Results Generated by Seq2seq.

Visual Results Generated by CAMN.

Visual Results Generated by DiffuGesture.

Visual Results Generated by HA2G.

Visual Results Generated by S2G.

Visual Results Generated by Trimodal.

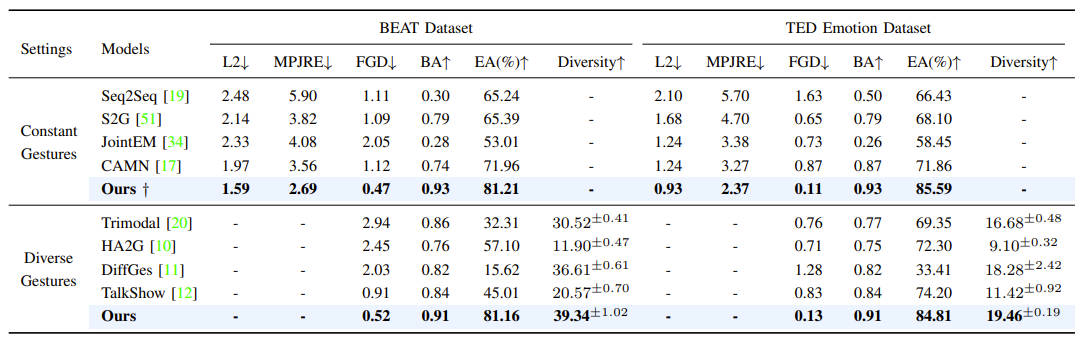

Comparison of the generated co-speech gestures with state-of-the-art methods on the BEAT and TED Emotion datasets. ↓ indicates lower is better, and ↑ indicates higher is better. ± denotes the 95% confidence interval. † means our EmotionGesture framework is applied without the sampling phase during inference.
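The captions report ± as a 95% confidence interval but do not state here how it was computed. A common choice, assumed in this sketch, is the normal approximation mean ± 1.96 · s/√n over n per-sample metric values; the function name `mean_with_ci95` is hypothetical.

```python
import math
import statistics
from typing import Sequence, Tuple


def mean_with_ci95(samples: Sequence[float]) -> Tuple[float, float]:
    """Return (mean, half_width) of a normal-approximation 95% confidence interval.

    Assumes roughly i.i.d. samples (e.g. a metric evaluated over several runs or clips).
    With very few samples, a Student-t quantile would be more appropriate than 1.96.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples to estimate the spread")
    mean = statistics.mean(samples)
    std_err = statistics.stdev(samples) / math.sqrt(len(samples))  # sample std / sqrt(n)
    return mean, 1.96 * std_err
```

For instance, `mean_with_ci95([1.0, 2.0, 3.0, 4.0, 5.0])` gives a mean of 3.0 and a half-width of about 1.39, which would be reported as 3.00 ± 1.39.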

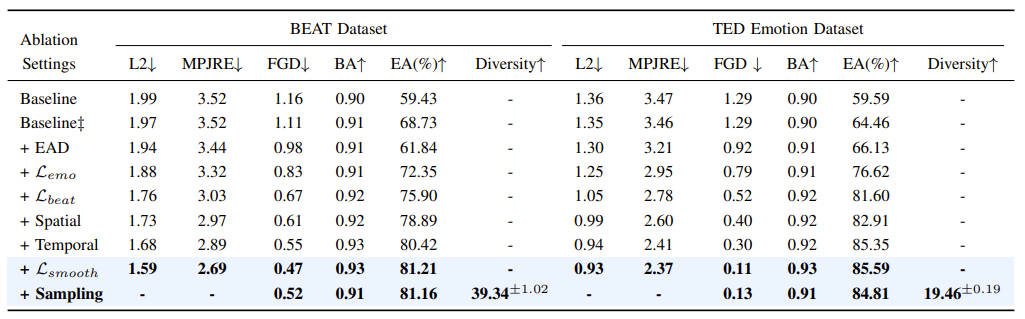

Ablation study on different loss functions and components of our proposed EmotionGesture framework. ↓ indicates lower is better, and ↑ indicates higher is better. ± denotes the 95% confidence interval. ‡ denotes that we directly concatenate the one-hot emotion label with the audio features as input. "Spatial" means spatial-interpolation prompt learning, and "Temporal" denotes temporal-reinforcement prompt learning. "+" indicates that the corresponding component or loss function is added sequentially on top of "Baseline". Note that our framework achieves diverse co-speech gesture synthesis only with the sampling setting.
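The ‡ baseline, which feeds the emotion label by plain concatenation, can be pictured as follows. This is a minimal sketch assuming frame-level audio features and a copy of the one-hot vector appended to every frame; the label set `EMOTIONS` and the function names are hypothetical and not taken from the BEAT or TED Emotion label definitions.

```python
from typing import List, Sequence

# Hypothetical emotion label set, for illustration only.
EMOTIONS = ["neutral", "happy", "sad", "angry"]


def one_hot(index: int, num_classes: int) -> List[float]:
    """Vector of zeros with a single 1.0 at the given class index."""
    vec = [0.0] * num_classes
    vec[index] = 1.0
    return vec


def concat_emotion_label(
    audio_frames: Sequence[Sequence[float]], emotion: str
) -> List[List[float]]:
    """Append the same one-hot emotion vector to every audio feature frame."""
    code = one_hot(EMOTIONS.index(emotion), len(EMOTIONS))
    return [list(frame) + code for frame in audio_frames]
```

Because the label is a fixed vector repeated over time, this baseline gives the generator one deterministic emotion code per clip, in contrast to the sampled emotion features used for diverse synthesis.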

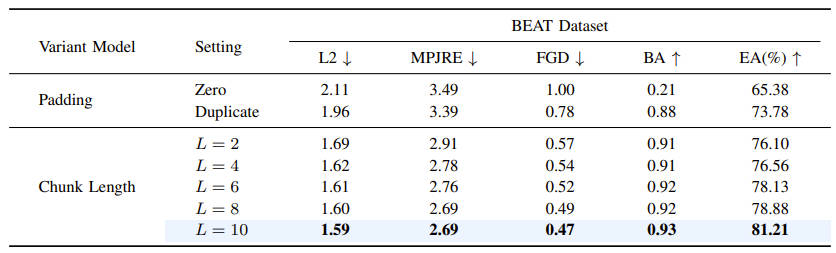

Ablation study on the influence of the transition chunk length L in the spatial-temporal prompter. ↓ indicates lower is better, and ↑ indicates higher is better. "Duplicate" indicates repeating the initial poses to match the temporal dimension of the target poses. Similarly, "Zero" means directly padding the temporal dimension of the initial poses with zeros. Note that for the padding strategies, we remove the spatial-temporal prompter in these experiments.
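The two padding baselines in this ablation can be sketched as below. This assumes poses are stored as a list of per-frame coordinate vectors and reads "repeating the initial poses" as cyclic tiling of the initial sequence (repeating only the last pose would be another reading); the function names are hypothetical.

```python
from typing import List, Sequence

Frame = List[float]


def pad_duplicate(initial: Sequence[Sequence[float]], target_len: int) -> List[Frame]:
    """'Duplicate': tile the initial pose frames cyclically up to target_len frames."""
    if not initial:
        raise ValueError("need at least one initial pose")
    return [list(initial[t % len(initial)]) for t in range(target_len)]


def pad_zero(initial: Sequence[Sequence[float]], target_len: int) -> List[Frame]:
    """'Zero': keep the initial poses and fill the remaining frames with zeros."""
    if not initial:
        raise ValueError("need at least one initial pose")
    dim = len(initial[0])
    padded = [list(frame) for frame in initial[:target_len]]
    padded += [[0.0] * dim for _ in range(target_len - len(padded))]
    return padded
```

With `initial = [[1.0, 1.0], [2.0, 2.0]]`, `pad_duplicate(initial, 5)` yields the two frames repeated as `[1, 1], [2, 2], [1, 1], [2, 2], [1, 1]`, while `pad_zero(initial, 4)` appends two all-zero frames. Neither strategy models how the motion should evolve, which is the gap a learned prompter is meant to fill.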

@misc{qi2023emotiongesture,

title={EmotionGesture: Audio-Driven Diverse Emotional Co-Speech 3D Gesture Generation},

author={Xingqun Qi and Chen Liu and Lincheng Li and Jie Hou and Haoran Xin and Xin Yu},

year={2023},

eprint={2305.18891},

archivePrefix={arXiv},

primaryClass={cs.CV}

}