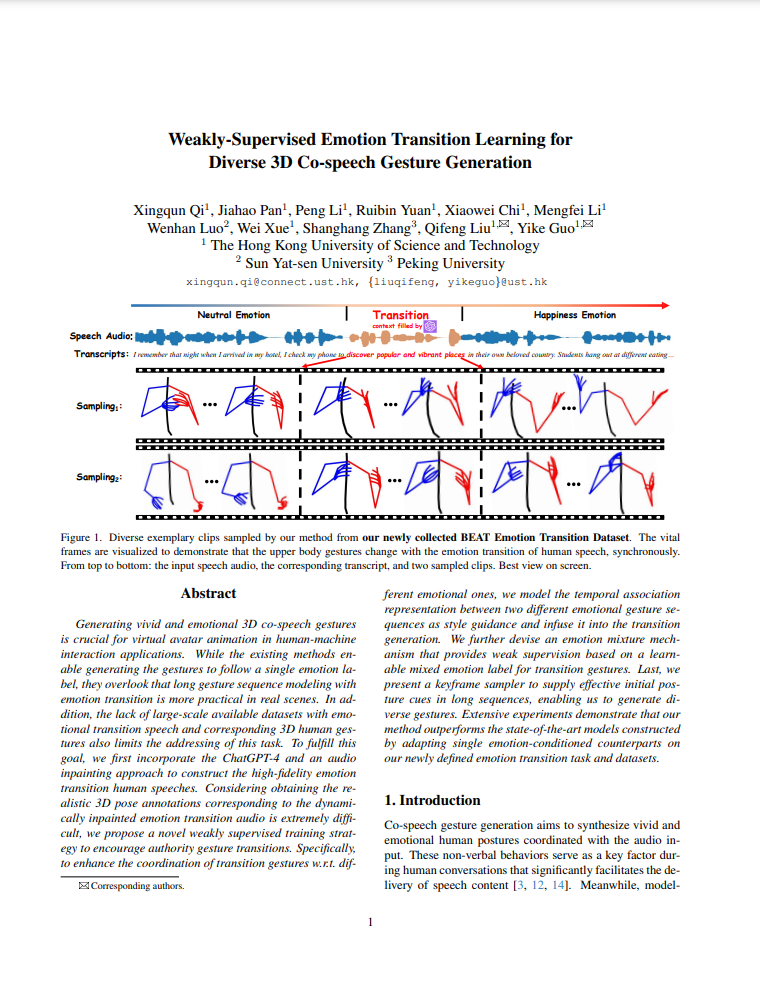

Figure 1. Diverse example clips sampled by our method from our newly collected BEAT Emotion Transition Dataset. Key frames are visualized to show that the upper-body gestures change in sync with the emotion transitions in human speech. From top to bottom: the input speech audio, the corresponding transcript, and two sampled clips. Best viewed on screen.

Abstract

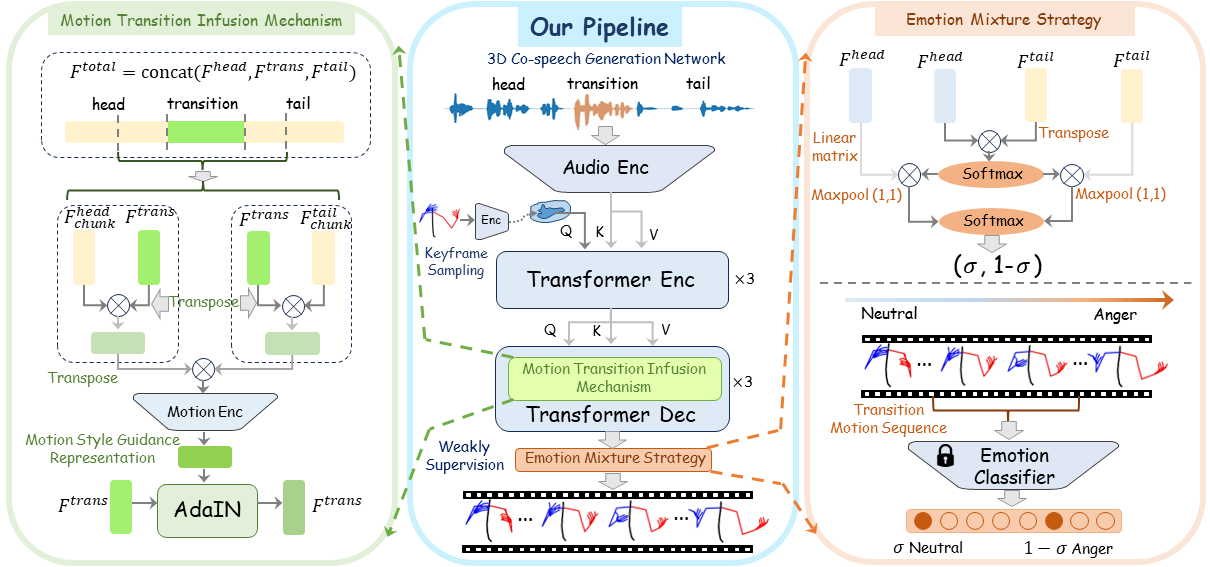

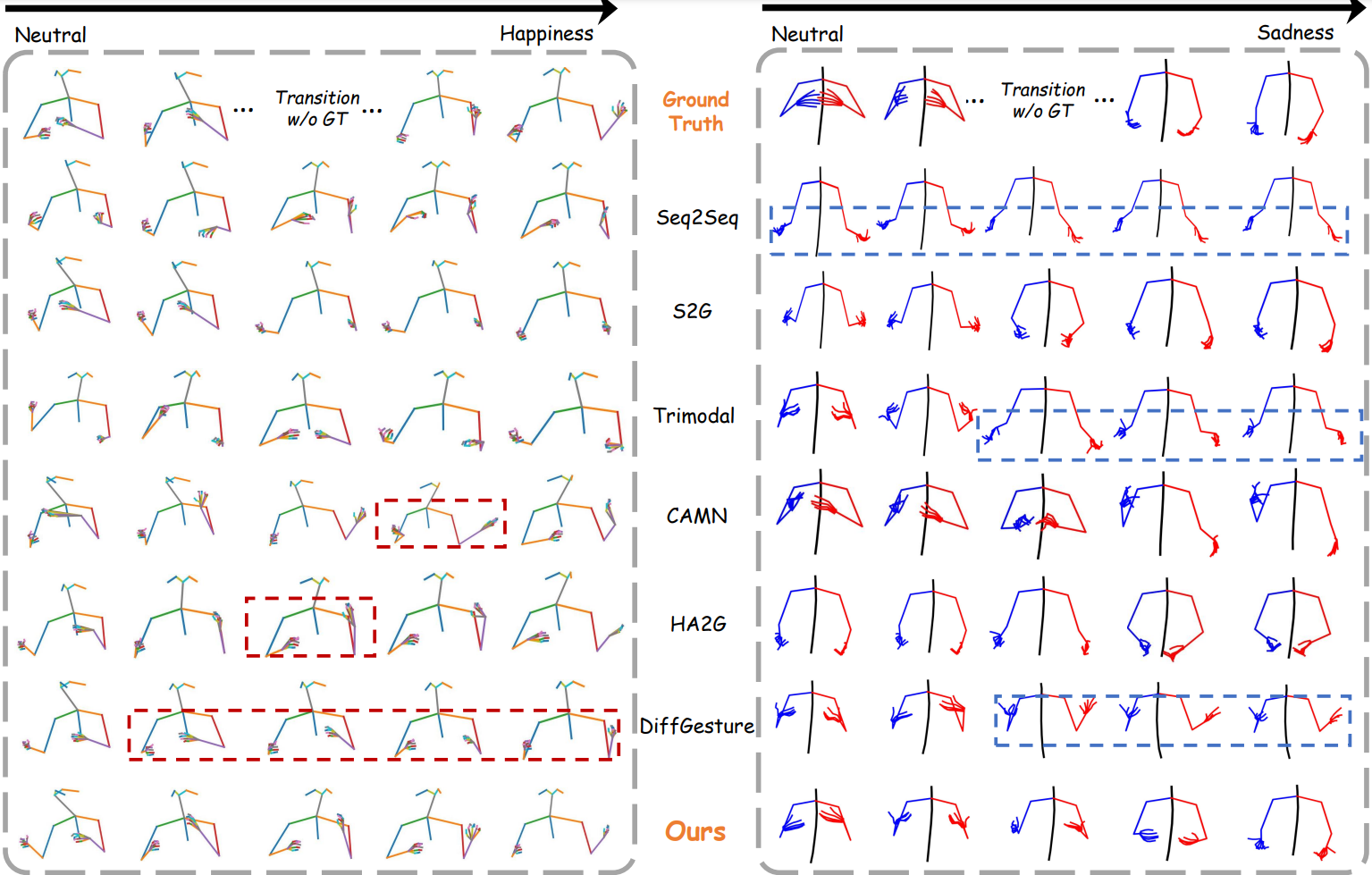

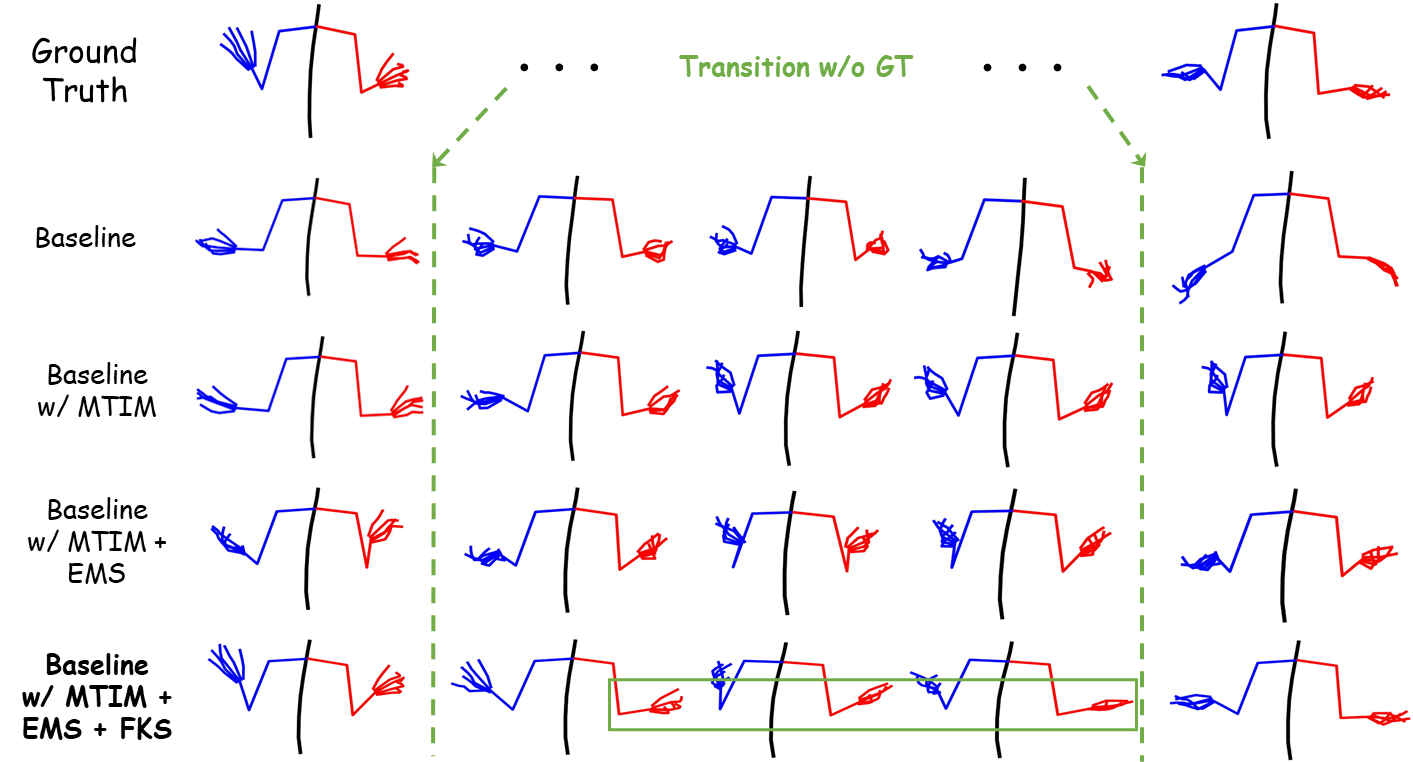

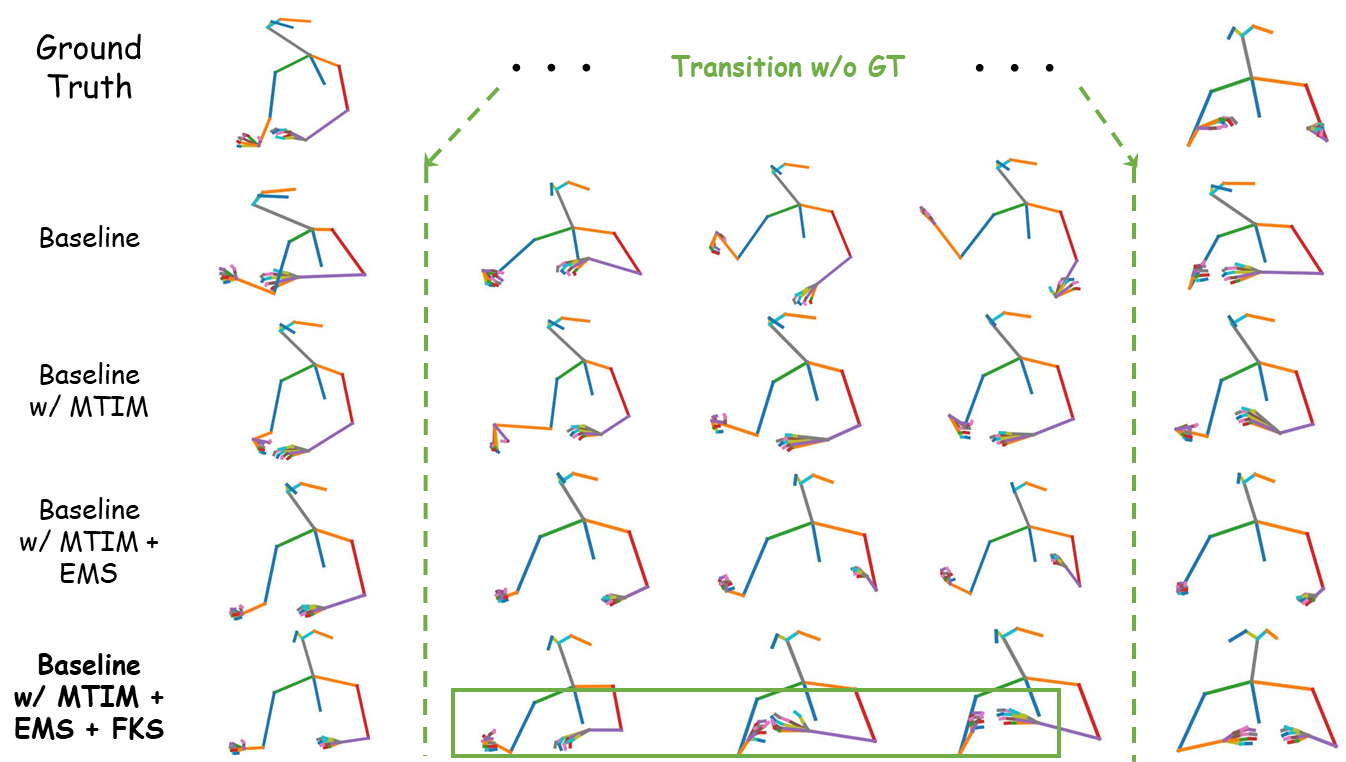

Generating vivid and emotional 3D co-speech gestures is crucial for virtual avatar animation in human-machine interaction applications. While existing methods can generate gestures that follow a single emotion label, they overlook that modeling long gesture sequences with emotion transitions is more practical in real scenarios. Moreover, the lack of large-scale datasets that pair emotion transition speech with corresponding 3D human gestures also hinders progress on this task. To this end, we first combine ChatGPT-4 with an audio inpainting approach to construct high-fidelity emotion transition human speech. Because obtaining realistic 3D pose annotations for the dynamically inpainted emotion transition audio is extremely difficult, we propose a novel weakly supervised training strategy to encourage authentic gesture transitions. Specifically, to enhance the coordination of transition gestures with the differently emotional gestures on either side, we model the temporal association representation between two different emotional gesture sequences as style guidance and infuse it into the transition generation. We further devise an emotion mixture mechanism that provides weak supervision for transition gestures based on a learnable mixed emotion label. Finally, we present a keyframe sampler that supplies effective initial posture cues in long sequences, enabling the generation of diverse gestures. Extensive experiments demonstrate that our method outperforms state-of-the-art models, adapted from their single-emotion-conditioned counterparts, on our newly defined emotion transition task and datasets.
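To make the idea of a "learnable mixed emotion label" concrete, here is a minimal sketch under a stated assumption: that the mixed label is a convex combination of the head and tail one-hot emotion labels, weighted by a single learnable scalar squashed through a sigmoid. The function name `mixed_emotion_label` and the parameter `alpha_logit` are hypothetical illustrations, not the authors' formulation.

```python
import math


def mixed_emotion_label(head: int, tail: int, num_emotions: int, alpha_logit: float) -> list[float]:
    """Blend two one-hot emotion labels into a soft 'transition' label.

    ASSUMPTION: the mixing weight is a sigmoid of one learnable scalar
    (alpha_logit); in a training framework this scalar would be a parameter
    updated by gradient descent. This is an illustrative sketch only.
    """
    alpha = 1.0 / (1.0 + math.exp(-alpha_logit))  # weight on the tail emotion, in (0, 1)
    label = [0.0] * num_emotions
    label[head] += 1.0 - alpha  # remaining mass stays on the head (e.g. neutral) emotion
    label[tail] += alpha
    return label  # entries sum to 1, so it can act as a soft class target


# alpha_logit = 0.0 gives an even 50/50 mix of emotion 0 and emotion 3.
print(mixed_emotion_label(head=0, tail=3, num_emotions=8, alpha_logit=0.0))
```

The sigmoid keeps the weight strictly between the two emotions, so the soft label can serve as weak supervision for the transition segment without committing to either endpoint emotion.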

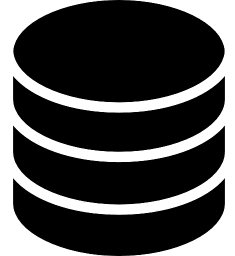

Dataset Construction

Figure 2. The pipeline of dataset construction. Head and tail audio clips, together with their transcripts, are fed into the pipeline to generate a smooth, high-quality transition.

The pipeline involves the following steps:

☆ Segmentation and Emotion Labeling: We first divide the previously aligned single-emotion co-speech gesture datasets into head and tail segments by splitting the original audio into 4-second clips. Heads are clips with neutral emotion, while tails carry various other emotions.

☆ Emotion Transition: Each transition pairs a neutral head with an emotional tail. In our approach, we intentionally avoid pairing segments with extreme emotional shifts (e.g., happiness-to-anger, happiness-to-sadness).
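The segmentation and pairing steps above can be sketched as follows. This is a minimal illustration, assuming raw audio indexed by sample count, that any trailing remainder shorter than 4 seconds is dropped, and that clips carry a string emotion label; the function names and the `excluded` set of (head emotion, tail emotion) pairs are hypothetical hooks for the "avoid extreme shifts" rule, not the authors' code.

```python
from itertools import product

CLIP_SECONDS = 4  # clip length stated in the text


def split_into_clips(num_samples: int, sample_rate: int,
                     clip_seconds: int = CLIP_SECONDS) -> list[tuple[int, int]]:
    """Return (start, end) sample indices of consecutive full-length clips.

    ASSUMPTION: a trailing remainder shorter than one clip is discarded.
    """
    clip_len = clip_seconds * sample_rate
    return [(s, s + clip_len) for s in range(0, num_samples - clip_len + 1, clip_len)]


def pair_head_tail(clips: list[tuple[str, str]],
                   excluded: frozenset = frozenset()) -> list[tuple[str, str]]:
    """Pair neutral head clips with non-neutral tail clips.

    clips: (clip_id, emotion) tuples.
    excluded: hypothetical set of (head_emotion, tail_emotion) pairs to skip.
    """
    heads = [c for c in clips if c[1] == "neutral"]
    tails = [c for c in clips if c[1] != "neutral"]
    return [(h[0], t[0]) for h, t in product(heads, tails)
            if (h[1], t[1]) not in excluded]


# 9 s of 16 kHz audio yields two full 4 s clips; the last 1 s is dropped.
print(split_into_clips(9 * 16000, 16000))
print(pair_head_tail([("a", "neutral"), ("b", "happy"), ("c", "sad")]))
```

Keeping the exclusion rule as data rather than hard-coded logic makes it easy to adjust which emotional shifts count as "extreme".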

☆ Transcript Generation with GPT-4: We prompt GPT-4 to generate transitional text between the head and tail clips, instructing it to create a transition that is smooth in both content and emotion and about 5 to 10 words long. For each data sample, GPT-4 returns three candidate transitions in JSON format, each accompanied by a confidence score. Finally, we discard samples with low confidence or excessive length.
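The filtering step above might look like the sketch below. It assumes a hypothetical JSON schema of the form `{"candidates": [{"text": ..., "confidence": ...}, ...]}` and a hypothetical confidence threshold of 0.7; the 10-word cap follows the 5 to 10 word target in the text. The real prompt format and thresholds are not specified in the source.

```python
import json
from typing import Optional

MIN_CONFIDENCE = 0.7  # hypothetical threshold, not from the source
MAX_WORDS = 10        # upper end of the 5 to 10 word target


def select_transition(response_json: str) -> Optional[str]:
    """Pick the most confident valid candidate, or None to discard the sample.

    ASSUMPTION: the response looks like
    {"candidates": [{"text": "...", "confidence": 0.9}, ...]}.
    """
    data = json.loads(response_json)
    valid = [c for c in data["candidates"]
             if c["confidence"] >= MIN_CONFIDENCE
             and len(c["text"].split()) <= MAX_WORDS]
    if not valid:
        return None  # low confidence or excessive length: drop this sample
    return max(valid, key=lambda c: c["confidence"])["text"]
```

A sample is kept only if at least one candidate passes both checks, which matches the text's rule of discarding samples with low confidence or excessive length.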

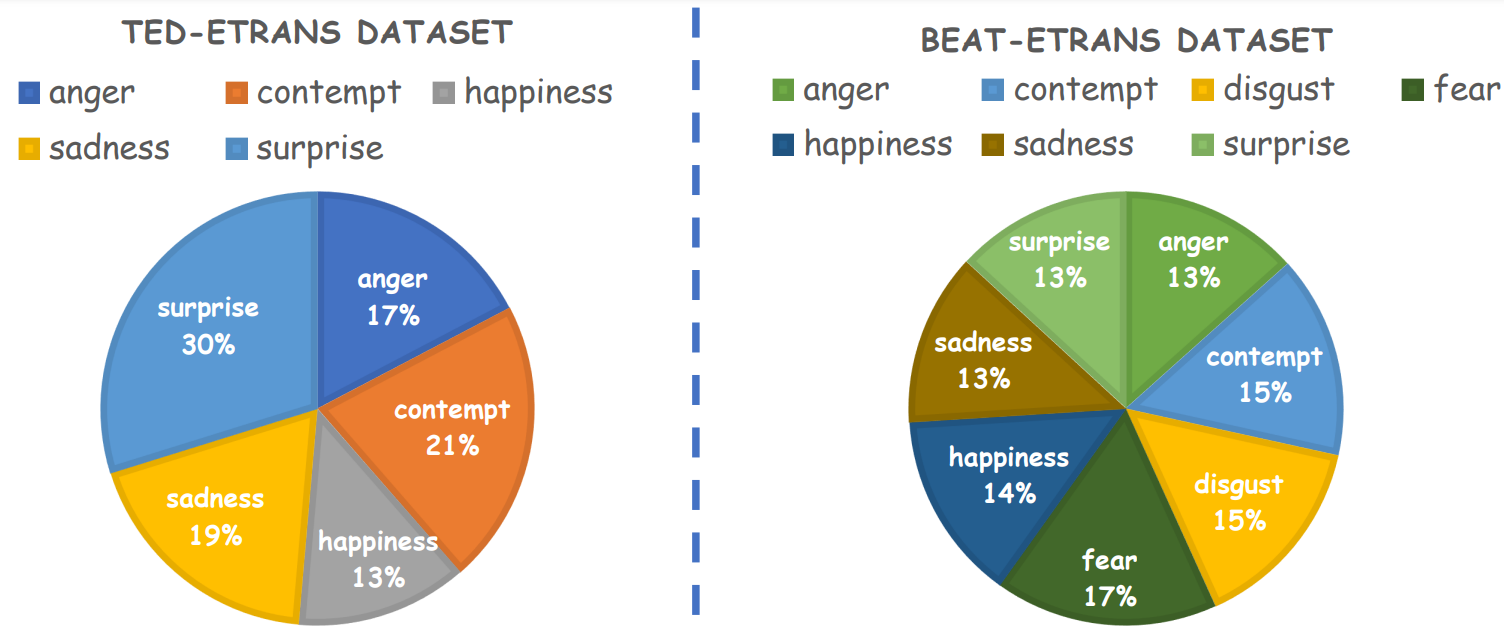

Dataset Statistics

Figure 3. Emotion transition distribution of our newly collected TED-ETrans and BEAT-ETrans datasets. All transitions start from neutral-emotion speech.

| Dataset | Joint Annotation | Body Joints | Hand Joints | Audio | Text | Speakers | Single Emotion | Emotion Transition | Duration (hours) |
|---|---|---|---|---|---|---|---|---|---|
| TED | pseudo label | 9 | ✗ | ✓ | ✓ | 1,766 | ✗ | ✗ | 106.1 |
| SCG | pseudo label | 14 | 24 | ✓ | ✗ | 6 | ✗ | ✗ | 33 |
| Trinity | mo-cap | 24 | 38 | ✓ | ✓ | 1 | ✗ | ✗ | 4 |
| ZeroEGGS | mo-cap | 27 | 48 | ✓ | ✓ | 1 | ✗ | ✗ | 2 |
| BEAT | mo-cap | 27 | 48 | ✓ | ✓ | 30 | ✓ | ✗ | 35 |
| TED-Expressive | pseudo label | 13 | 30 | ✓ | ✓ | 1,764 | ✗ | ✗ | 100.8 |
| BEAT-ETrans (ours) | mo-cap | 27 | 48 | ✓ | ✓ | 30 | 8 | ✓ | 161.3 |
| TED-ETrans (ours) | pseudo label | 13 | 30 | ✓ | ✓ | 1,764 | 6 | ✓ | 59.8 |